What is SEO?

Search Engine Optimization (SEO) is the process of understanding what search engine users are looking for and providing it in the most effective manner possible. If understanding your audience is one side of the SEO coin, the other side is presenting your content and pages so that search engines can crawl them effectively. Search engines first crawl websites and then index them. They then rank those pages according to various factors, creating a hierarchy of results for users to click on. The pages that display these results are called SERPs (search engine result pages).

Within the SERPs there may also be SERP features: additional elements on the results page, such as a text box that directly answers the query you entered, “People Also Ask” boxes, and internal page links. Organic search results are among the most impactful forms of marketing because they carry more credibility than paid placements. Search engine users primarily click on organic results rather than paid advertisements: only about 2.8% of users click on paid ads, and organic results receive most of the remaining clicks.

However, in pursuit of these coveted rankings, you should understand the ethical and best-practice guidelines. SEO tactics are commonly described as white hat or black hat. White hat tactics follow best practices, while black hat tactics are frowned upon and attempt to spam search engines.

Google’s Webmaster Guidelines:

- Always produce pages that are intended to be useful for users.

- Don’t deceive your users.

- Avoid tricks intended to improve search engine rankings. A good rule of thumb: if you would not be comfortable explaining what you did to a Google employee, don’t do it.

- Think about what makes your website unique or valuable.

- Avoid automatically generated content.

- Avoid cloaking, the practice of showing users a different page from the one shown to crawlers.

- Avoid participating in link schemes.

Google’s Webmaster Guidelines for Listing Local Businesses

- Make sure you are eligible for inclusion in the Google My Business index: you must have a physical address and must serve your customers face-to-face.

- Don’t misrepresent your business by listing services you don’t actually offer.

- Don’t list a P.O. box as a place of business.

- Honestly and accurately represent all aspects of your business, including its name, address, phone number, website address, business categories, hours of operation, and other features.

- Don’t abuse the review portion of your Google My Business listing (for example, by posting fake reviews).

How can it help your business?

Search engine optimization provides some of the most credible and effective forms of marketing because of the trust that organic search results tend to carry. Users tend to trust organic results on the first page of Google more than results labeled as advertisements or pages that rank several pages further down. If your business or website can achieve a high, authoritative ranking, such as a spot on the first page, driving traffic to your website becomes a much more efficient and effective process.

What does it take to learn SEO?

As with most subjects, learning SEO requires you to understand the theory and put it into practice. As mentioned earlier, SEO is a coin with two sides: one is understanding your audience, and the other is recognizing how search engines crawl, index, and rank sites. Read as much material on search engine optimization as you can, then put that knowledge to use by practicing on a website. You can find much of the necessary material here, and examining the XP Journal along with the additional resources is an invaluable step in learning this subject.

Crawling, Indexing, and Ranking

Search engines rely on a particular set of functions to assemble a well-organized collection of page results: crawling, indexing, and ranking. It is important to note that about 90% of all search engine queries happen on Google.com, so we will focus primarily on Google.

Crawling is the process of searching for and discovering new web pages and content. It is carried out by programs called search engine crawlers, which scour the web for new or updated content. Content here can include web pages, images, videos, PDFs, and more. Crawlers find it by following links on pages they already know about, which may lead them to new pages. It is also possible to stop crawlers from finding all or certain pages of a website; for example, you may block search engines from crawling pages that are not useful to users.

Indexing is the process of storing and organizing crawled web pages in a database from which they can be retrieved and displayed on SERPs. To see whether your website is indexed, you can use the site: advanced search operator on Google, for example site:example.com. This displays the website along with most of its indexed pages. A more accurate way to find out whether your website has been crawled and indexed is Google Search Console, which reports which pages are indexed and which are not. With this tool you can also submit sitemaps and monitor which pages have been crawled and indexed.

Ranking is the process of ordering these pages so that the most relevant and helpful results appear first. It follows that the websites you see in higher-ranked positions are generally the ones search engines judge to have more relevant and authoritative content.

Some possible reasons for your site not showing up in search results:

- Your site is brand new and hasn’t had the chance to be crawled yet

- Your site contains some basic code called crawl directives that blocks crawlers from accessing your website

- Your site’s navigation is confusing for crawlers to follow

- Your site may be penalized for spam tactics

- Your site may not be linked to from external websites

However, there are some pages you may not want Googlebot to find, such as old URLs with thin content or duplicate URLs (for example, sort and filter parameters). To keep Googlebot from crawling certain pages of your site, you can use a robots.txt file. This file sits in the root directory of your website (for example, example.com/robots.txt) and tells crawlers which parts of the site they should and should not crawl. Googlebot reads your robots.txt file and follows its directives when deciding which pages to crawl. Note that robots.txt controls crawling rather than indexing; to keep a page out of the index, use a noindex tag. Because anyone can read a robots.txt file, don’t list private pages in it, since that reveals their location to spammers; it is better to put such pages behind logins or use noindex tags. If Googlebot does not find a robots.txt file, it proceeds to crawl the site. If it finds one, it follows the directives accordingly. If it encounters an error while trying to access the robots.txt file and cannot tell whether one exists, it will not crawl the site.
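To see how these directives behave, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. The robots.txt content and the URLs are hypothetical examples. This parser implements the original robots.txt rules and does not support every Google extension (such as `*` wildcards inside paths), so Google’s own tools remain the authority on how Googlebot reads your file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com: keep crawlers out of the cart
# and internal search results, which add little value in search.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been fetched."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
for url in ("https://example.com/products/shoes",
            "https://example.com/cart/checkout",
            "https://example.com/search?q=shoes"):
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url}: {'crawl' if allowed else 'blocked'}")
```

Only the product page is reported as crawlable; the cart and search URLs match a `Disallow` prefix.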

This also brings up the idea of crawl budget: the average number of URLs a search engine will crawl on your site before moving on to another one. It is therefore important to optimize your crawl budget and use the proper directives so that all the necessary pages on your site are being crawled, and to make sure you are not blocking Googlebot from your important pages. 301 redirects, noindex tags, and rel="canonical" tags are useful tools for managing crawl budget. A 301 redirect permanently sends visitors and crawlers from one page to an alternative page, which is useful for pages that would otherwise return a 404 error. A noindex tag tells search engines not to store a page or its content in their index. A rel="canonical" tag tells crawlers which version of a page is the canonical (preferred) one, such as when the same page is reachable through URLs with different parameters. URL parameters are the key-value pairs appended to a URL after a question mark, such as ?color=blue&sort=price. They are most common on e-commerce websites, where sorting and filtering options append parameters to URLs and make the same content available at many different addresses; a canonical tag points all of those variations to one canonical page.
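The canonical idea can be sketched in a few lines of Python with `urllib.parse`. This is a minimal illustration, assuming the hypothetical parameter names in `NON_CANONICAL_PARAMS` only re-sort, filter, or track the same content; on a real site you would first decide which parameters actually change the content.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that only sort, filter, or track the same content.
NON_CANONICAL_PARAMS = {"sort", "color", "size", "sessionid"}


def canonical_url(url: str) -> str:
    """Drop non-canonical query parameters (and any #fragment) from a URL."""
    parts = urlsplit(url)
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key.lower() not in NON_CANONICAL_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_link_tag(url: str) -> str:
    """Build the <link rel="canonical"> element for the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}" />'


print(canonical_link_tag("https://example.com/shoes?sort=price&color=blue"))
# <link rel="canonical" href="https://example.com/shoes" />
```

Parameters that do change the content (such as `page=2` in this sketch) are kept, so each genuinely different page keeps its own canonical URL.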

Some important questions to ask yourself to discover whether crawlers can reach your entire site:

- Can crawlers find all your important pages? – Ask yourself: can the bot crawl through your website, and not just to it?

- Is your content hidden behind login forms? – If users must log in, answer surveys, or fill out forms before accessing content, search engines won’t be able to crawl those pages.

- Does your website rely on search forms for navigation? – Robots cannot use search forms.

- Is text hidden within non-text content? – Text shown only inside images, videos, or GIFs cannot be reliably crawled, so don’t use these formats for text you want indexed.

- Can search engines follow your site navigation? – Just as crawlers need links from external websites to discover your site, they need internal links within your site to discover your other pages.

Some common navigational mistakes that keep crawlers from accessing an entire website:

- Having mobile navigation that shows different links than desktop navigation.

- Showing different navigation to certain users, which may look like cloaking (a spammy tactic) to search engines.

- Using JavaScript-enabled navigation rather than HTML links.

- Forgetting to link to a primary page on your website through your navigation.

Another important consideration is information architecture: the practice of organizing and labeling the content on your site so that users can navigate it intuitively. Good information architecture also makes it much more likely that your full website is crawled appropriately. One way to help search engine crawlers find your pages is to submit a sitemap through Google Search Console. A sitemap is a list of URLs on your site that helps crawlers find all your important pages. It is a useful measure, but not a substitute for proper navigation within your website. Include only the URLs you want crawled, and give consistent directions: don’t list URLs you have blocked in robots.txt, and list only the canonical version of each page rather than its duplicates.
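A basic XML sitemap can be generated with Python’s built-in `xml.etree.ElementTree`. This is a minimal sketch, assuming you already have your list of canonical URLs and a set of URLs to leave out (for example, pages blocked in robots.txt); the URLs shown are hypothetical. It writes only the required `<loc>` element for each URL, following the sitemaps.org 0.9 format.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls, excluded=frozenset()):
    """Return sitemap XML listing each URL once, skipping excluded URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen = set()
    for url in urls:
        if url in excluded or url in seen:
            continue  # blocked/non-canonical URL, or a repeat
        seen.add(url)
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap(
    ["https://example.com/", "https://example.com/menu", "https://example.com/cart/"],
    excluded={"https://example.com/cart/"},
))
```

Save the output as sitemap.xml at your site’s root and submit its URL in Google Search Console.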

When auditing how crawlable your site is, watch for crawl errors. You can check for them in Google Search Console’s crawl error and indexing reports. Some of the most common are the 4xx and 5xx status codes. 4xx codes are client errors: the requested URL has bad syntax or cannot be fulfilled. The most common is the dreaded 404 (Not Found) error, which usually means the page was deleted, the URL was mistyped, or a redirect is broken. 5xx codes are server errors: the server where the page is located failed to fulfill the request, for example because the request timed out.

One of the most effective ways to deal with certain error codes or missing pages is a 301 redirect, which permanently sends visitors and crawlers from one URL to an alternative URL. Some practical benefits include:

- Passing link equity to the new page

- Helping Google find and index the new page

- Providing a better user experience

As useful as 301 redirects are, you want to avoid redirect chains: a redirect that points to a URL which itself redirects, and so on. For instance, if example1.com redirects to example2.com, which redirects to example3.com, it is better to remove example2.com from the chain and redirect example1.com directly to example3.com.
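Flattening redirect chains is easy to automate once your redirect rules are available as a source-to-target mapping. The sketch below assumes such a mapping (a hypothetical input format; many servers and plugins can export something similar) and rewrites every rule to point straight at its final destination, raising an error if it finds a redirect loop.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source URL directly at its final destination.

    Raises ValueError if the redirect rules contain a loop.
    """
    flattened = {}
    for source, target in redirects.items():
        visited = {source}
        # Keep following the chain while the target itself redirects.
        while target in redirects:
            if target in visited:
                raise ValueError(f"redirect loop involving {target}")
            visited.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


rules = {"example1.com": "example2.com", "example2.com": "example3.com"}
print(flatten_redirects(rules))
# {'example1.com': 'example3.com', 'example2.com': 'example3.com'}
```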

After making sure search engines can properly crawl your website, the next step is to make sure it can be indexed. Just because your site can be crawled does not mean it will be stored in the index. You can see how Googlebot views your page by checking its cached version, a snapshot of what Googlebot saw when it last crawled the page: on the SERP, click the three-dot icon next to the result and select “Cached”. (Google has since retired cached links from its results; the URL Inspection tool in Google Search Console offers a similar view of a crawled page.) How often Googlebot crawls a site varies: well-established sites get crawled more often, while newer sites get crawled less frequently.

It is important to understand that pages can also be removed from the index. Just because your site has been stored in the index does not mean it will stay there. Here are some of the reasons a page may be removed from the index:

- The URL is returning a 4xx or 5xx error. This could be intentional (the page was deleted and now returns a 404) or accidental (the page was moved and no redirect was set up).

- The URL had a noindex meta tag added.

- The URL has been manually penalized for violating search engine guidelines.

- The URL has been blocked from crawling by a password requirement.

If you suspect that a URL has been dropped from the index, you can check it with the URL Inspection tool in Google Search Console, which replaced the older Fetch as Google tool and lets you request indexing. Its live test can also render the page, showing whether there are any issues with how Google interprets it.

Robots meta directives are pieces of code placed in the <head> section of your page’s HTML that tell crawlers how to treat your URL. You can also use the X-Robots-Tag HTTP header, which lets you block search engines at scale with more flexibility and functionality: you can block non-HTML files, use regular expressions, and apply site-wide noindex rules. The following are the most common directives:

- index / noindex – tells search engines whether they should include the URL in their index.

- follow / nofollow – tells search engines whether to follow the links on the page and pass link equity through them.

- noarchive – tells search engines not to store or show a cached copy of the page. For example, if you run an e-commerce site whose prices change regularly, you may want to keep searchers from seeing outdated prices in a cached version.
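To check which of these directives a page actually sends, you can scan its HTML for robots meta tags. This minimal sketch uses Python’s standard `html.parser` module; it only reads `<meta name="robots">` tags in the markup you pass in, so directives sent through the X-Robots-Tag HTTP header or crawler-specific tags such as `<meta name="googlebot">` would need separate handling.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # html.parser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        for directive in attributes.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def robots_directives(html: str) -> set[str]:
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(sorted(robots_directives(page)))  # ['nofollow', 'noindex']
```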

To understand the exchange between web servers and clients, it is important to understand HTTP. HTTP stands for Hypertext Transfer Protocol; it is the primary application-layer protocol for fetching resources such as HTML documents. HTTP defines several request methods, including:

- GET – retrieves data from the server

- POST – submits new data to the server

- PUT – updates data already on the server

- DELETE – deletes data from the server

It is also important to know the classes of HTTP status codes:

- 1xx – Informational: request received, continuing to process

- 2xx – Success: request successfully received, understood, and accepted

- 3xx – Redirect: further action must be taken to complete the request

- 4xx – Client Error: the request contains bad syntax or cannot be fulfilled

- 5xx – Server Error: the server failed to fulfill an apparently valid request
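Because the first digit of a status code determines its class, a crawl-audit script can categorize responses with simple integer division. This sketch uses Python’s `http.HTTPStatus` enum for the standard reason phrases; the function name `describe_status` is just an illustrative choice.

```python
from http import HTTPStatus

STATUS_CLASSES = {
    1: "Informational",
    2: "Success",
    3: "Redirect",
    4: "Client Error",
    5: "Server Error",
}


def describe_status(code: int) -> str:
    """Return e.g. '404 Not Found (Client Error)' for an HTTP status code."""
    status_class = STATUS_CLASSES.get(code // 100)
    if status_class is None:
        raise ValueError(f"{code} is not a valid HTTP status code")
    try:
        phrase = HTTPStatus(code).phrase
    except ValueError:  # valid class, but not a code Python knows by name
        phrase = "Unknown"
    return f"{code} {phrase} ({status_class})"


for code in (200, 301, 404, 503):
    print(describe_status(code))
```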

*WordPress tip: In WordPress, go to Settings > Reading and make sure Search Engine Visibility (“Discourage search engines from indexing this site”) is not checked. If it is checked, WordPress discourages search engines from crawling and indexing your site.

Now let’s talk about ranking. Search engines use algorithms: processes or formulas by which stored information is retrieved and ordered in meaningful ways. These algorithms change constantly. If your website has lost or gained ranking positions, it may be due to changes in the search engine’s algorithm. In that case, it is worth comparing your site against Google’s Quality Guidelines and Search Quality Rater Guidelines; both reveal a great deal about what search engines want.

Link building is another critical component of search engine ranking. There are two kinds of links to consider in link building:

- Inbound links (backlinks) – links from another site to yours: (site A) → (site B)

- Internal links – links from one page of your site to another: (site A) → (site A)

Backlinks work much like word-of-mouth referrals. Referrals from a good site are a welcome sign of authority. Referrals from yourself may be biased, so they are not a good sign of authority. Referrals from irrelevant or low-quality websites are a bad sign of authority, and having no referrals is an unclear sign. PageRank, part of Google’s core algorithm, estimates the importance of a web page by measuring the quality and quantity of the links pointing to it. The more natural backlinks a site has from highly authoritative sources, the better its chances of ranking higher.
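The core idea behind PageRank can be illustrated with the formula from the original PageRank paper: each page splits its score among the pages it links to, and a damping factor (commonly 0.85) models a searcher who occasionally jumps to a random page. The sketch below runs that calculation by power iteration on a hypothetical three-page link graph. It illustrates the concept only; Google’s current ranking systems use many more signals and are not public.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Approximate PageRank scores by power iteration.

    `links` maps each page to the pages it links out to.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page gets a small baseline score from random jumps.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its score evenly through its outbound links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outbound links spreads its score over all pages.
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical graph: A and B both link to C, and C links back to A.
scores = pagerank({"A": ["C"], "B": ["C"], "C": ["A"]})
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

In this example C scores highest because two pages link to it, and A outranks B because A receives a link from the well-linked page C, while nothing links to B.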

Content plays a fairly large role in SEO. Content is anything users can consume, such as images, videos, and of course text. If your content is relevant to the user’s query, it is more likely to be positioned higher in the search results. Ask yourself: does this content match the words that were searched, and does it help fulfill the user’s intent? Although hundreds of ranking signals exist, the top three have remained fairly consistent:

- Links to your website (which serve as third-party credibility signals)

- On-Page Content (quality content that fulfills a searcher’s intent)

- RankBrain

Now, you may be asking: what is RankBrain? RankBrain is the machine learning component of Google’s core algorithm; it continually gathers and interprets data to improve how websites are ranked. Machine learning refers to computer programs that keep improving their predictions over time through new observations and training data. Because RankBrain is always learning, it adjusts search results on a continuous basis, depending on which URLs prove more relevant. Even though RankBrain is widely recognized, many in the industry still do not fully understand how it works. For that reason, the best thing we can do as SEOs is provide the most relevant and useful content to fulfill users’ query intents.

Engagement metrics are data that represent how searchers interact with your site after arriving from search results. A well-known question about these metrics is whether they are a cause of rankings, a correlation, or both: are sites ranked highly because they have good engagement metrics, or do highly ranked sites simply earn good engagement metrics? These engagement metrics include:

- Clicks – visits from search

- Bounce Rate – the percentage of all website sessions in which users viewed only one page

- Time on Page – amount of time the visitor spent on a page

- Pogo Sticking – clicking on an organic result and quickly returning to the SERP to look for more results
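Bounce rate, as defined above, is simple arithmetic once you know how many pages were viewed in each session. This is a sketch under that definition only; analytics tools define sessions and bounces in their own ways (for example, Google Analytics 4 bases bounce rate on sessions that were not engaged), so their numbers may differ. The session data is hypothetical.

```python
def bounce_rate(pages_per_session: list[int]) -> float:
    """Percentage of sessions in which the visitor viewed only one page."""
    if not pages_per_session:
        raise ValueError("no sessions recorded")
    bounces = sum(1 for pages in pages_per_session if pages == 1)
    return 100.0 * bounces / len(pages_per_session)


# Hypothetical sessions: number of pages viewed in each visit.
print(bounce_rate([1, 3, 1, 2]))  # 50.0
```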

Google has discussed how it perceives and considers engagement metrics, and has made it clear that it uses click data to modify the SERPs. It therefore seems likely that engagement metrics are more than just a correlation; however, Google has also said that engagement metrics are not definitive ranking signals. And because RankBrain is always changing and learning, your website may change position without any alterations to your content or pages. The general understanding is that objective factors such as links and content rank the page first, and Google then uses engagement metrics to help adjust the results if it didn’t get them right.

The SERP started out as a flat structure of just 10 blue links. Over time, Google has added SERP features, including:

- Paid Advertisements

- Featured Snippets

- People Also Ask Boxes

- Local Map Packs

- Knowledge Panels

- Sitelinks

Helpful as these features are for users, they caused two main problems for SEOs: they pushed organic results further down the SERP, and they led fewer people to click through to websites because their queries were already answered on the SERP itself.

Here is a list of query intents along with the SERP features they may trigger:

| Query intent | Possible SERP feature triggered |
|---|---|
| Informational | Featured Snippet |
| Informational with one answer | Knowledge Graph / Instant Answer |
| Local | Map Pack |
| Transactional | Shopping |

Local search is another concept to be aware of when discussing crawling, indexing, and ranking, because Google has its own proprietary index of local business listings from which it creates local search results. One of the most important steps in local SEO for a business with a physical location is to claim, verify, and optimize a free Google My Business listing. When it comes to local search, Google uses three main factors to determine ranking:

- Relevance – how well the business matches what the consumer is looking for

  - To make the business as relevant to searchers as possible, fill out all of its information thoroughly and accurately.

- Distance – Google uses geo-location to better serve local results

  - Local search results are extremely sensitive to proximity, the distance between the user’s location and the business location.

- Prominence – Google looks to reward businesses that are well known in the real world

  - The number of Google reviews a business receives, and their sentiment, are among the main ways Google gauges a local business’s prominence.

Business citations are also an important consideration when optimizing local search listings. A “business citation” or “business listing” is a web-based reference to a local business’s NAP (name, address, phone number) on a localized platform such as Yelp, Acxiom, YP, Infogroup, or Localeze. When a business has consistent NAP information across many different platforms, Google can trust it more and present it in the SERP with more confidence. Remember, though, that standard organic ranking practices still matter for local business listings as well.
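Checking NAP consistency across citations is easy to script once you normalize trivial differences such as punctuation, letter case, and phone formatting. This is a minimal sketch; the abbreviation map, the US-style phone handling, and the sample citations are hypothetical assumptions, and real address matching usually needs more rules than this.

```python
import re
from collections import Counter

# Hypothetical abbreviation map; extend it to match the addresses you use.
ABBREVIATIONS = {"st": "street", "ave": "avenue", "rd": "road", "ste": "suite"}


def normalize_text(text: str) -> str:
    """Lowercase, drop punctuation, and expand common abbreviations."""
    words = re.sub(r"[^a-z0-9\s]", "", text.lower()).split()
    return " ".join(ABBREVIATIONS.get(word, word) for word in words)


def normalize_phone(phone: str) -> str:
    """Keep digits only; drop a leading US country code (an assumption)."""
    digits = re.sub(r"\D", "", phone)
    return digits[1:] if len(digits) == 11 and digits.startswith("1") else digits


def inconsistent_citations(citations: dict[str, tuple[str, str, str]]) -> list[str]:
    """Return platforms whose NAP differs from the most common version."""
    if not citations:
        return []
    normalized = {
        platform: (normalize_text(name), normalize_text(address), normalize_phone(phone))
        for platform, (name, address, phone) in citations.items()
    }
    most_common, _ = Counter(normalized.values()).most_common(1)[0]
    return sorted(platform for platform, nap in normalized.items() if nap != most_common)


citations = {
    "Yelp": ("Joe's Hoagies", "123 Main St.", "(215) 555-0100"),
    "YP": ("Joes Hoagies", "123 Main Street", "+1 215-555-0100"),
    "Website": ("Joe's Hoagies", "456 Oak Ave", "215-555-0100"),
}
print(inconsistent_citations(citations))  # ['Website']
```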

Google is constantly refining its recipe for better search experiences. Real-world engagement metrics, such as how often users interact with and respond to a local business, are also monitored and counted toward local search rankings. These interactions are harder to game than static information such as links and citations. Since Google aims to deliver the most relevant local businesses to searchers, it makes sense for it to use real-time engagement metrics.

Keyword Research

- What are people searching for?

- How many people are searching for it?

- In what format do they want that information?

- Who is searching for these terms?

- When are people searching for sandwiches, lunch, burgers, etc.?

- What types of sandwiches, lunch, burgers, etc. are people searching for?

- Are there seasonality trends throughout the year?

- How are people searching for sandwiches?

- What words do they use?

- What questions do they ask?

- Are most searches performed on mobile devices?

- Why are people seeking ice cream?

- Are people looking for health-conscious ice cream specifically or just looking to get a meal?

- Where are potential customers located: locally, nationally, or internationally?

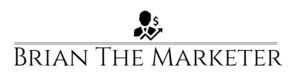

The Search Demand Curve

Don’t underestimate these less popular keywords. Long-tail keywords are highly specific, so the users who search for them tend to have clearer intent and convert better. For instance, someone who searches for “computers” could want to learn which computer to buy, how computers work, or when computers were invented. However, someone who searches for “best priced 13-inch Apple laptop with 8 GB of memory” practically has their wallet out!

Questions are also SEO gold. Discovering what questions people are asking can yield great organic traffic for your website. For example, you can create an FAQ page that answers these questions and incorporates the keywords you discovered.

Investigating your competitors and the keywords they rank for can also help you find strategic keywords. You can either target the same keywords, since they have a proven record of effectiveness, or look for keywords your competitors are not using as low-hanging-fruit opportunities.

Understanding seasonal and regional keywords can also pay off. You can discover keywords that are searched heavily around particular seasons, prepare content months in advance, and give it a good push during those periods. Researching keywords by region can likewise reveal opportunities specific to a certain city or location. Google Trends is helpful here, since it shows “Interest by subregion.” Geo-specific research can also help you tailor your content to your audience. For instance, “sub” is the common term for this sandwich in many parts of the United States, but in Philadelphia it is called a hoagie.

To find out how users want their queries answered and what type of content you should create, look at the SERPs and their features. The format Google chooses for an answer depends on intent, and every query has its own intent. It is wise to study the SERP for a keyword you want to target before creating content for it. Shopping carousels, local packs, and “refine by” filters are all SERP features Google uses to better answer user queries. Google explains these intents in its Quality Rater Guidelines:

- Informational – The searcher wants to find information, such as the name of a brand or how large the Grand Canyon is.

- Navigational – The searcher is looking for a particular website, such as typing Google.com

- Transactional – The searcher wants to do something, such as buy a product or listen to music.

- Commercial Investigation – The searcher wants to get information regarding a potential purchase, such as comparing separate products or finding the best price.

- Local – The searcher wants to find a local store, such as a nearby coffee shop, doctor’s office, or music venue.

Tools are also necessary for proper keyword research. Here are some of the fundamental tools an SEO can use for keyword research:

- Moz Keyword Explorer

- Google Keyword Planner

- Google Trends

- AnswerThePublic

- SpyFu Keyword Research Tool

On-Page SEO

On-page SEO is a multifaceted field that extends beyond content into other aspects such as schema and meta tags. Still, understanding how to create your content and actually use the keywords from your keyword research is critical. First, look at what shows up in the SERPs for your target keyword and note what kind of content is presented. Some characteristics of ranking pages to note:

- Are they image / video heavy?

- Is the content in a longer form or short and concise?

- Is the format in lists, bullets, or paragraphs?

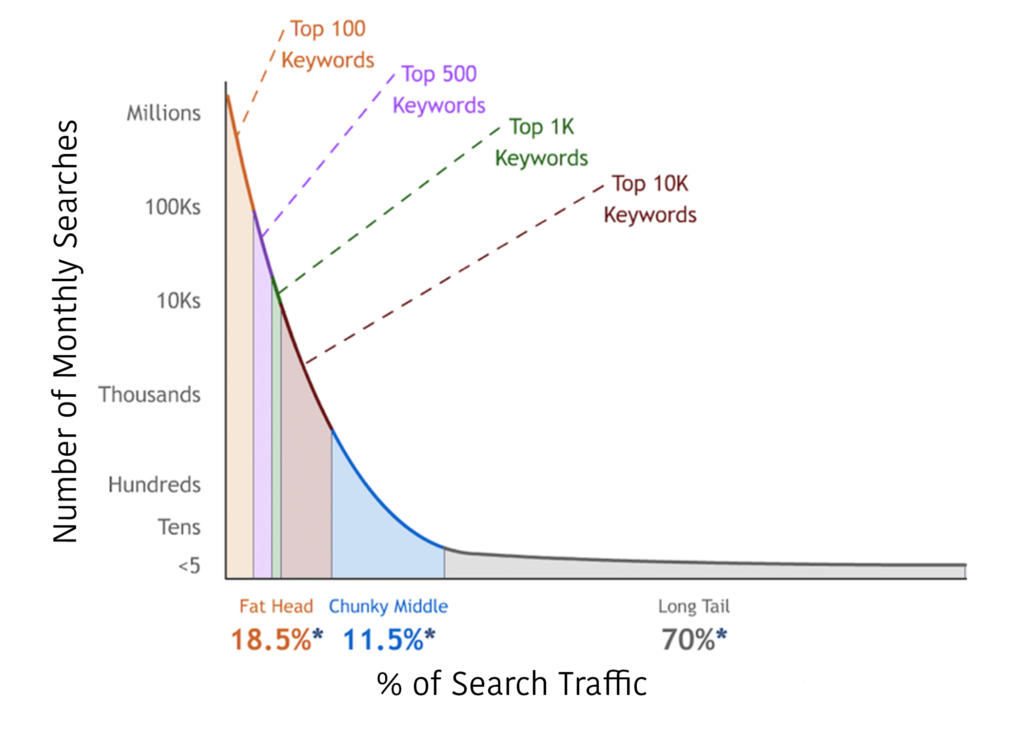

Survey your keywords, then group those with similar topics and intent. Build each page around one of these groups rather than creating a separate page for every keyword variation. Once your keywords are grouped and the SERP has been analyzed, ask yourself: “What unique value could I offer to make my page better than the pages currently ranking for my keyword?”

Your web content should exist primarily to answer the searcher’s query, guide them through your site, help them understand your site’s purpose, and provide the best value possible. Alongside these high-value tactics, it is also important to avoid the trap of low-value tactics.

Thin content is the product of an older strategy: creating a page for every variation of a keyword in order to rank #1 for each highly specific query. This spreads your content thin across many pages. Google is clear that you should build comprehensive topic pages covering a collection of related keywords rather than many thin variations. Google addressed this in its 2011 update known as Panda.

Duplicate content is another low-value tactic: content shared between domains or between multiple pages of a single domain. Scraped content goes a step further; it is the unauthorized use of content copied from another website, either republished as-is or with slight adjustments. Because there are legitimate reasons for internal or cross-domain duplicate content, Google encourages the use of a rel=canonical tag to point to the original version. The overall rule of thumb is to keep your content unique in both wording and value.

It is also worth debunking the “duplicate content penalty” myth: there is no Google penalty for duplicate content. For instance, if you copied an article from the Associated Press and posted it on your own site, Google would not penalize you, but it would filter duplicate versions of the content from its search results. If two or more pieces of content are substantially similar, Google shows the canonical URL and hides the duplicates. This is not a penalty; it is simply how Google filters its search results to show only one version of the content.
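To spot near-duplicate pages on your own site, one common illustrative technique is to compare overlapping word sequences (shingles) with Jaccard similarity. The sketch below uses three-word shingles, an arbitrary choice; it is not how Google detects duplicates (Google does not publish that), and any similarity threshold you act on is your own assumption.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping `size`-word sequences in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Shared shingles divided by all distinct shingles (0.0 to 1.0)."""
    shingles_a, shingles_b = shingles(a, size), shingles(b, size)
    if not shingles_a and not shingles_b:
        return 1.0  # two empty texts are trivially identical
    return len(shingles_a & shingles_b) / len(shingles_a | shingles_b)


original = "the quick brown fox jumps over the lazy dog"
rewrite = "the quick brown fox jumps over the lazy cat"
print(jaccard_similarity(original, rewrite))  # 0.75
```

Changing one word alters only the final shingle, so six of the eight distinct shingles are shared.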

A basic rule of search engines is to show crawlers the same content you show human visitors. That means you should never hide text in your HTML that normal visitors cannot see. If Google detects that a page is hiding content or presenting different content to visitors and crawlers, it may penalize the site and prevent the page from ranking. A related note: Google prefers pages with fully visible articles, so aim to avoid “click here to read more” buttons where you can.

Keyword stuffing was another common attempt to game the signals that highly ranked websites share. This low-value tactic tries to rank for a keyword by packing a page’s content with that keyword. Although Google looks for mentions of keywords and related concepts on your pages, the page itself has to provide value to users. If a page’s content sounds like it was written by a robot, it won’t rank well in the SERPs.
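One rough way to catch accidental stuffing in your own drafts is to measure how much of the text a keyword phrase occupies. This is a hypothetical diagnostic, not a Google metric: Google publishes no ideal keyword density, so use the number only to spot obvious repetition, never as a target.

```python
import re

WORD = re.compile(r"[a-z0-9']+")


def keyword_density(text: str, keyword: str) -> float:
    """Percentage of words in `text` taken up by occurrences of `keyword`."""
    words = WORD.findall(text.lower())
    phrase = WORD.findall(keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    hits, i = 0, 0
    while i <= len(words) - n:
        if words[i:i + n] == phrase:
            hits += 1
            i += n  # don't count overlapping matches twice
        else:
            i += 1
    return 100.0 * hits * n / len(words)


draft = "Cheap shoes! Cheap shoes for everyone. Buy cheap shoes today."
print(keyword_density(draft, "cheap shoes"))  # 60.0
```

Six of the draft’s ten words belong to the phrase, a clear sign the copy reads as stuffed.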

This brings us to another low-value tactic: auto-generated content, meaning articles and text strung together by a program rather than written by a person. Advances in machine learning will likely improve the results of auto-generated content, but it is still best to avoid this tactic, since it does little to produce effective, valuable content for users.

So what should you do instead? 10x it! It is critical to understand that there is no secret sauce in SEO. Your main focus should be providing the best answers, in the most suitable format, to your users’ questions. It is not enough to avoid low-value tactics; you also need to create great content that provides high value to visitors of your site. A useful process to follow:

- Search for your keyword to find the type of content that shows up.

- Identify the format the content is presented in (text, image, video, etc.) and which pages rank highly for those keywords.

- Determine what you will create through planning and preparation.

- Create content that is better than your competitors’ content.

If you can manage to create content that is 10x better than your competitors’, Google will reward you for it. Don’t worry about how long or short your content is; there is no magic number. Just focus on answering queries in the most effective and meaningful manner. It is also worth considering which content on your site already ranks well. Sometimes there is no need to reinvent the wheel.

A note on NAP: it is advantageous to consistently display your name, address, and phone number (NAP) throughout your site. These are often displayed in the header and footer of a site. It is also worthwhile to mark up this information using local business schema. If you are a multi-location business, it would be wise to create a separate page for each location. If you have hundreds or even thousands of locations, a store locator would be beneficial for user experience.
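As a minimal sketch of the local business markup mentioned above, the Python function below turns NAP details into a schema.org LocalBusiness JSON-LD block that could be placed in a page’s HTML. The function name and the business details in the example call are hypothetical placeholders; check the properties you need against schema.org and Google’s structured data documentation before relying on it.

```python
import json


def local_business_jsonld(name, street, city, region, postal_code, phone):
    """Build a schema.org LocalBusiness JSON-LD <script> block from NAP data."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


# Placeholder business details, for illustration only.
print(local_business_jsonld("Example Bakery", "123 Main St", "Springfield",
                            "IL", "62701", "+1-555-555-0100"))
```

Generating the block from the same data that feeds your header and footer is one way to keep the NAP in the markup consistent with the NAP visitors see.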

In addition, take some time to think about whether your business serves customers on a local, national, or international level. Local businesses serve customers in a particular area, national businesses serve customers across a country, and international businesses provide their products and services to customers in multiple countries. Understanding which category your business falls under will allow you to tailor your NAP appropriately.

Aspects of on-page optimization that go beyond the content can have favorable effects on your search traffic as well. Header tags are one of these optimizations. A header tag is the HTML tag that marks a headline on the page, and the h1 tag signifies the main headline. These tags range from h1 to h6, with h1 being the most important and h6 the least important. Your H1 tag should contain your primary keyword. Header tags should not be used for non-headline purposes such as navigation titles or phone numbers.
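To check a page against the H1 advice above, here is a small sketch built on Python’s standard `html.parser` module. It collects every h1 to h6 heading and reports whether any h1 contains the primary keyword. The class and function names are hypothetical, and the check is a plain case-insensitive substring match, not a model of how Google reads headings.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingCollector(HTMLParser):
    """Collect (tag, text) pairs for every h1-h6 element, in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []  # (tag, text) pairs
        self._tag = None    # heading tag that is currently open, if any
        self._text = []     # text fragments seen inside the open heading

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._tag, self._text = tag, []

    def handle_data(self, data):
        if self._tag is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == self._tag:
            # Normalize whitespace so "<h1>Learn <em>SEO</em></h1>" becomes "Learn SEO".
            self.headings.append((tag, " ".join("".join(self._text).split())))
            self._tag = None


def check_h1(html, keyword):
    """Return a list of problems; an empty list means the check passed."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    h1_texts = [text for tag, text in collector.headings if tag == "h1"]
    if not h1_texts:
        return ["no h1 found"]
    if not any(keyword.lower() in text.lower() for text in h1_texts):
        return [f"no h1 contains {keyword!r}"]
    return []


print(check_h1("<h1>Learn SEO Basics</h1><h2>Why it matters</h2>", "seo"))
```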

Another important element to inspect is your internal linking structure. Internal links allow search engine crawlers to find all the important pages of your website. Every internal link passes link equity to the linked page and also helps your visitors navigate through your site. Links that require a click to become visible are often hidden from search engines, so if the only links to a page sit inside a drop-down menu, it would be wise to add other, visible links to it.

Anchor text should be understood so that you can use it appropriately and to your website’s benefit. Anchor text is the clickable piece of text that links one page to another. It also signals to search engines what the destination page may be about. If the anchor text is “learn SEO”, that is a good indicator to search engines that the destination page contains content about learning SEO. However, be careful: too many internal links with the same keyword-rich anchor text may come across as a spammy tactic to search engines, so use anchor text and internal links in a natural way. Google’s Webmaster Guidelines also say to limit the number of links on a page to a reasonable amount. Too many links won’t get you penalized, but they will affect how Google finds and evaluates your pages. As previously mentioned, links pass link equity to the pages they point to. A page only has a certain amount of link equity it can pass, so the more links on a page, the less equity each link passes. Too many links may also overwhelm your readers.
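To make the dilution effect concrete, here is a deliberately simplified model borrowed from the original PageRank paper, in which a page passes a damped share of its score (damping factor 0.85) split equally across its outbound links. Google’s current systems are far more complex and unpublished, so the function below (a hypothetical helper) and its numbers are illustration only.

```python
def equity_per_link(page_equity, outbound_links, damping=0.85):
    """Equity passed through each link in a simplified PageRank-style model:
    damping * page_equity, split equally across all outbound links."""
    if outbound_links <= 0:
        raise ValueError("the page must have at least one outbound link")
    return damping * page_equity / outbound_links


# The same page equity spread over more links leaves less for each one.
for links in (10, 50, 100):
    print(f"{links:>3} links -> {equity_per_link(1.0, links):.4f} per link")
```

Doubling the number of links on a page halves what each link passes in this model, which is the intuition behind keeping link counts reasonable.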

Images are one of the biggest culprits of slow pages, so it is worth assessing the images on your web pages and optimizing them for speed. One of the best ways to do this is with image compression. Options include using “save for web”, resizing images, and running them through compression tools like Optimizilla or ImageOptim. Evaluate each option and decide which route is most suitable for your website. Thumbnails can also slow pages down significantly, especially on e-commerce sites. Here is a quick reference for which format to use for different image types:

- If your image requires animation, use GIF

- If you don’t need to preserve high resolutions for your image, use JPEG (and test out different compression settings)

- If you do need to preserve high image resolution, use PNG (PNG-24 if the image contains many colors, PNG-8 if it contains few).
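The quick reference above can be restated as a small decision function. This is only a sketch of those three rules of thumb: the function name is hypothetical, and it deliberately covers just the formats listed in this guide.

```python
def choose_image_format(animated, needs_high_resolution, many_colors=True):
    """Apply the quick-reference rules for picking an image file format."""
    if animated:
        return "GIF"
    if not needs_high_resolution:
        return "JPEG"  # then test a few different compression settings
    return "PNG-24" if many_colors else "PNG-8"


print(choose_image_format(animated=False, needs_high_resolution=True, many_colors=False))
```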

You may have heard of alt text. Alternative (alt) text is a principle of web accessibility: it describes images to visually impaired users via screen readers. Search engines also crawl alt text to get better context about what an image displays. Ensure your alt descriptions read naturally for people, and avoid stuffing keywords into them to manipulate search engines.
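A quick way to find images that need attention is to scan a page’s HTML for img tags with a missing or empty alt attribute. The sketch below uses Python’s standard `html.parser`; the class name is hypothetical. Note that purely decorative images legitimately use an empty alt, so treat the output as a review list rather than a list of errors.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.needs_review = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # A bare `alt` attribute with no value is reported as None.
        if not (attributes.get("alt") or "").strip():
            self.needs_review.append(attributes.get("src", "(no src)"))


audit = AltTextAudit()
audit.feed('<img src="logo.png" alt="Company logo">'
           '<img src="hero.jpg">'
           '<img src="divider.gif" alt="">')
audit.close()
print(audit.needs_review)  # ['hero.jpg', 'divider.gif']
```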

Another worthwhile optimization for your images, and your site overall, is to submit an image sitemap. This helps Google crawl and index your images, including images it might otherwise have missed. You can submit a sitemap in your Google Search Console account.
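Here is a minimal sketch of generating an image sitemap with Python’s standard `xml.etree.ElementTree`, using the standard sitemap namespace plus Google’s image extension namespace. The function name and the example URLs are hypothetical; this only emits the required `loc` entries, so consult Google’s image sitemap documentation for anything beyond that.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"
ET.register_namespace("", SITEMAP_NS)       # default namespace for <urlset>, <url>, <loc>
ET.register_namespace("image", IMAGE_NS)    # image:image and image:loc


def build_image_sitemap(pages):
    """pages maps each page URL to a list of image URLs that appear on it."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url
    return ('<?xml version="1.0" encoding="UTF-8"?>\n'
            + ET.tostring(urlset, encoding="unicode"))


print(build_image_sitemap({
    "https://www.example.com/recipes/banana-bread": [
        "https://www.example.com/images/banana-bread.jpg",
    ],
}))
```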

It should be clear by now that a user-friendly website is a must if you want to perform well in the SEO arena. For this reason, we will address formatting for readability and featured snippets. Your page could contain well-structured and valuable content, but if it is not formatted well, your audience may never read it. Follow these readability principles to create impactful content that reaches your audience efficiently.

Text Size and Color

- Avoid fonts that are too tiny

- Google recommends a base font size of 16 CSS pixels or larger. You want to minimize pinching and zooming on mobile.

- The text color should contrast enough with the background color to be easily readable.

Headings

- Break up your content into helpful headings.

- This can help readers navigate through the content.

Bullet Points

- These are great for lists

- Bullet points can also help readers skim and find information they are looking for more quickly.

Paragraph Breaks

- Avoiding walls of text can help prevent page abandonment and encourage site visitors to read more of your page.

Bold and Italics for Emphasis

- Bold and italic text can signal which words or sentences deserve the reader’s attention.

Paying attention to formatting can make your page much more likely to earn featured snippets. Paying attention to query intent also helps you structure content for snippets: understand exactly what your audience is looking for so that you can provide the most fitting content for them.

Title tags are the HTML element that states the title of a page. They are nested within the head tag of the page’s HTML and may look something like:

<head><title>Example Page Title</title></head>

Each page should have its own unique title, and what you put into the title tag will show up in the SERPs and on the browser tab. The title tag creates a first impression, so it’s important to choose a title that encourages users to click; the more compelling your title tag, the better. The title tag tells users they have found a relevant page, but it does not appear on the page itself. Once they arrive, the page’s header tag reassures them that they found the content they were looking for.

So what makes an effective title tag? Placing your target keyword in the title tag is always worthwhile, ideally as close to the front of the title as possible. Also try to keep the title to about 50 to 60 characters, since that is roughly how many characters Google usually displays in its SERPs. However, don’t sacrifice the quality of the title just to fit that character count. Be mindful of what you are trying to rank for and optimize for that.
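The guidance above can be turned into a quick sketch that returns notes on a title rather than hard pass/fail rules, since quality matters more than the exact count. The function name is hypothetical, and the “back half of the title” threshold for a late keyword is an illustrative assumption, not a Google rule.

```python
def check_title(title, keyword, min_len=50, max_len=60):
    """Return notes on a title tag; an empty list means no issues were found."""
    notes = []
    if not min_len <= len(title) <= max_len:
        notes.append(f"length {len(title)} is outside {min_len}-{max_len} characters")
    position = title.lower().find(keyword.lower())
    if position == -1:
        notes.append(f"keyword {keyword!r} is missing")
    elif position > len(title) // 2:
        # Hypothetical threshold: flag keywords that sit in the back half of the title.
        notes.append(f"keyword {keyword!r} appears late in the title")
    return notes


print(check_title("Learn SEO: A Beginner's Guide to Search Engine Basics", "seo"))
```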

Meta descriptions describe the contents of the page they are on and are also nested within the head tag of the HTML code. A meta description may look something like this:

<meta name="description" content="description of page here"/>

Whatever you put into the description field will show up in the search results below the title of the page. Sometimes Google will display an alternate description, but don’t let this deter you from writing your own. Descriptions should be highly relevant to your page; although they do not directly influence rankings, they may improve click-through rates.

What makes an effective meta description? As mentioned, it should be highly relevant to your page and briefly summarize the content presented on it. It should give users enough information to know it is relevant to their query, but not so much that there is no need to click. It should also contain your primary keyword. Search engines typically show between 150 and 300 characters, so it is best to tailor your meta description to fit within that range.
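As a sketch of applying those guidelines, the function below (a hypothetical helper) validates a draft description against the 150 to 300 character range and the primary keyword mentioned above, then builds the meta tag. It escapes quotes and ampersands with Python’s standard `html.escape` so the text cannot break out of the `content` attribute.

```python
import html


def meta_description_tag(description, keyword, min_len=150, max_len=300):
    """Validate a meta description against the guidelines above and return the tag."""
    text = " ".join(description.split())  # collapse stray whitespace and newlines
    if not min_len <= len(text) <= max_len:
        raise ValueError(f"description is {len(text)} characters; aim for {min_len}-{max_len}")
    if keyword.lower() not in text.lower():
        raise ValueError(f"description does not mention {keyword!r}")
    # Escape quotes and ampersands so the text stays inside the attribute.
    return f'<meta name="description" content="{html.escape(text, quote=True)}"/>'
```

Raising an error, rather than silently truncating, leaves the rewriting to a person, which fits the advice that the description should read as a genuine summary.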

URL stands for uniform resource locator. It is the address of a web page or other piece of content. URLs are displayed in the SERPs alongside the title, so it is important to put some time into structuring them clearly and appealingly. The construction of your URLs may affect click-through rate, and search engines also use URLs when evaluating and ranking pages.

Search engines require each page to have a unique URL, and clear page naming and structure help users understand what a URL is about. Users are more likely to click on URLs that reinforce the idea that they have found the content they were looking for.

If your site covers multiple topics, avoid nesting pages under irrelevant folders. The folder a page sits in can signal the type of content it presents. For example, dates in a URL may benefit news sites, but they can also make content look outdated. Users look at the folders in a URL to confirm they are in the right section of the site and to clarify what the topic is about.

Studies show that users find shorter URLs more appealing. Overly long URLs may be cut off with an ellipsis, so it is in the interest of both the website and the user to lean toward shorter URLs. You can shorten a URL by including fewer words or removing unnecessary folders. However, descriptive URLs are important, so don’t sacrifice descriptiveness for the sake of shorter URLs.

If your page is targeting a specific keyword, it is wise to place that keyword in your URL. Just make sure not to go overboard, and be mindful of keywords repeated across folders, as this may make your URLs seem stuffed.

URLs should also be easy to read, so try to avoid placing numbers, symbols, or parameters in them. Not all web applications interpret separators like underscores ( _ ) or plus signs ( + ) the same way, and search engines cannot tell where one word ends and the next begins when words run together. For these reasons, use the hyphen character ( - ) to separate words.

Sites should avoid mixing uppercase and lowercase letters in URLs. To handle this, your developer can apply a rewrite rule on the server (on Apache servers, for example, through the .htaccess file) that automatically redirects uppercase URLs to their lowercase versions.
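The URL advice above (lowercase letters, no stray symbols, hyphens between words) can be applied when a new page’s slug is first created. Here is a minimal sketch using only Python’s standard library; the `slugify` name is a common convention rather than a specific library’s API. It keeps digits, since some slugs genuinely need them, and it does not replace the server-side lowercase redirect described above, which handles URLs that already exist.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + accent, then drop the non-ASCII accents.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    text = text.lower().replace("'", "")      # "beginner's" -> "beginners"
    text = re.sub(r"[^a-z0-9]+", "-", text)   # any other run of symbols or spaces -> "-"
    return text.strip("-")


print(slugify("What Is SEO? A Beginner's Guide"))  # what-is-seo-a-beginners-guide
```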

It is vital that local business websites mention city names, neighborhood names, and other regional descriptors in their content, URLs, and other on-site assets.

It’s recommended that websites use a secure protocol (HTTPS). To serve your URLs over HTTPS, you must obtain an SSL/TLS certificate, which encrypts the data passing between the browser and the server so that it stays private.

Technical SEO

Technical aspects are important to understand so that you can communicate about them intelligently with developers. The fundamentals of search engine optimization and the technicalities of web development go hand in hand, so SEOs need cross-team support to be effective. Rather than waiting for a problem to arise and then solving it, set up the right infrastructure early on to avoid problems altogether. To prepare web pages for visits from both humans and crawlers, you need an understanding of technical optimization. You should understand:

- How websites work

- How search engines understand websites

- How users interact with websites

A website’s journey from domain name purchase to fully rendered page matters because the way a page is assembled affects how quickly it loads, and load speed is evaluated by search engines and considered in their rankings. Google also renders JavaScript on a ‘second pass’: it first crawls the HTML and CSS, and may render the JavaScript anywhere from a few days to a few weeks later. This means any vital SEO assets not implemented in the HTML may not get indexed.

This section will teach you how to diagnose where your website might be inefficient, what you can do to streamline it, and the positive effects this can have on your rankings and user experience.

Before a website can be accessed, it needs to be set up. The first step in this process is purchasing a domain name from a domain name registrar such as GoDaddy or HostGator. These registrars are simply organizations that manage the reservation of domain names. Domain names are linked to IP addresses, because the internet does not understand a name like brianthemarketer.com as a website without the help of the DNS (Domain Name System). Computers on the internet locate each other using Internet Protocol (IP) addresses, which look something like 127.0.0.1. Since names are easier for humans to read, we need to link those human-readable names to machine-readable numbers: (Domain Name) -> (DNS) -> (IP)

- User Requests Domain – Now that the domain is purchased and linked to an IP via the DNS, users can request it by typing it into a browser or by clicking a link.

- Browser Makes Request – The browser makes a DNS lookup request to retrieve the appropriate IP, and then a request to the server to retrieve the code your website is built with, such as HTML, CSS, and JavaScript.

- Server Sends Resources – Once the server receives the request for the code, it sends over the resources to be assembled in the web browser.

- Browser Assembles the Web Page – The browser now has all the necessary resources, but it still needs to assemble the web page. As it parses and organizes the page’s resources, it creates a Document Object Model (DOM). The DOM is what you see when you right-click and select “inspect element”.

- Browser Makes Final Requests – The browser will only present the site once all resources are parsed and executed. If all resources are not yet available, it will make additional requests until it has collected all of the code.

- Website Appears in Browser – After all that, your website has now been rendered into what you see in your browser.
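The first two steps above (domain to DNS to IP, then a request to that IP) can be modeled in a few lines. This is a toy sketch: the `DNS_RECORDS` table and function names are hypothetical stand-ins, and the address comes from 203.0.113.0/24, a range reserved for documentation. A real lookup goes to DNS servers over the network, for example through Python’s `socket.gethostbyname`.

```python
from urllib.parse import urlsplit

# Hypothetical lookup table standing in for the DNS.
DNS_RECORDS = {"brianthemarketer.com": "203.0.113.10"}


def resolve(url):
    """Extract the domain from a URL and look up its IP address."""
    host = urlsplit(url).hostname  # lowercased, without port or path
    if host not in DNS_RECORDS:
        raise LookupError(f"no DNS record for {host!r}")
    return DNS_RECORDS[host]


def describe_request(url):
    """Summarize the request the browser would send to the resolved server."""
    ip = resolve(url)
    path = urlsplit(url).path or "/"
    return f"GET {path} from {ip}"


print(describe_request("https://brianthemarketer.com/blog"))
```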

You can shorten your critical rendering path by setting scripts to “async” when they’re not needed to render content above the fold. The async attribute lets the browser keep assembling the DOM while it fetches the script. If the browser has to pause every time it fetches a script (these are called render-blocking scripts), page loading can slow down substantially. Another optimization your developer can implement is to shorten the critical rendering path by removing unnecessary scripts.
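To find candidates for the async treatment described above, the sketch below scans HTML for external scripts that have neither `async` nor the related `defer` attribute, using Python’s standard `html.parser`. The class name is hypothetical. It treats `type="module"` scripts as non-blocking because browsers defer them by default, and it ignores inline scripts, which cannot be made async.

```python
from html.parser import HTMLParser


class RenderBlockingScripts(HTMLParser):
    """Collect external scripts that load without async or defer."""

    def __init__(self):
        super().__init__()
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        attributes = dict(attrs)  # boolean attributes such as async map to None
        src = attributes.get("src")
        if not src:
            return  # inline scripts are out of scope for this check
        deferred = ("async" in attributes or "defer" in attributes
                    or attributes.get("type") == "module")  # module scripts defer by default
        if not deferred:
            self.blocking.append(src)


finder = RenderBlockingScripts()
finder.feed('<head><script src="/js/analytics.js" async></script>'
            '<script src="/js/app.js"></script>'
            '<script src="/js/menu.js" defer></script></head>')
finder.close()
print(finder.blocking)  # ['/js/app.js']
```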

Websites are built with coding languages that construct web pages. The three most common are:

- HTML – What a website says (title, body, content, etc.)

- CSS – How a website looks (colors, fonts, etc.)

- JavaScript – How a website behaves (interactivity, dynamic content, etc.)

HTML is what the website says; you can think of it as the writer. HTML stands for HyperText Markup Language, and it is the backbone of the website. Elements like headings, paragraphs, and lists are all defined in HTML. It’s important for SEOs to understand HTML because it is what lives under the hood of websites, and it is also the primary element Google crawls to determine how relevant a web page is to a particular query.

CSS defines what a website looks like; you can think of it as the painter. CSS stands for Cascading Style Sheets, and it controls fonts, colors, and layouts. With CSS, websites can be styled without manually adding styling code to the HTML of each web page. It wasn’t until 2014 that Google started to index pages like an actual browser rather than as text only. Before that, an old black-hat tactic was to manipulate search engines by hiding text and links with CSS.

SEOs should care about CSS because stylesheets can live in external files instead of your page’s HTML, which makes your page less code-heavy and improves load times. Browsers still have to download resources like your CSS file, so compressing them can make your web page load faster. A page that is more content-heavy than code-heavy can also lead to better indexing of your site’s content. Just make sure not to hide links and content with CSS, as that can get you manually penalized and removed from Google’s index.

JavaScript is how a website behaves and brings life to a page; you can think of it as the wizard. With JavaScript, websites were no longer limited to structure and style; they could also include dynamic elements. When someone accesses a page enhanced with JavaScript, the script interacts with the static HTML that the server returned, resulting in a web page that comes to life with some sort of interactivity. It could create a pop-up, for example, or it could request third-party resources like ads to display on your page.

As favorable an effect as JavaScript can have, it can also pose problems for SEO. Search engines do not view JavaScript the same way humans do, because of client-side versus server-side rendering. Most JavaScript is executed on the client side, in the browser. With server-side rendering, by contrast, the scripts run on the server, and the server sends the page to the browser in its fully rendered state. SEO-critical page elements such as text, links, and tags are invisible to search engines, at least initially, if they are loaded on the client side with JavaScript rather than included in your HTML. Google says that as long as you do not block Googlebot from crawling your JavaScript files, it should be able to render and understand your pages the same way a browser would. However, because of this “second pass” form of indexing, it may miss certain elements that are only available once JavaScript has been executed.
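One rough way to spot the problem described above is to check whether SEO-critical text is already present in the HTML the server returns, before any JavaScript runs. The sketch below assumes UTF-8 pages and uses hypothetical function names; it is a plain substring check, so a phrase that only appears inside an attribute would still count as present.

```python
from urllib.request import urlopen


def fetch_raw_html(url):
    """Download the HTML exactly as the server returns it; no JavaScript runs."""
    with urlopen(url) as response:
        return response.read().decode("utf-8", errors="replace")


def missing_from_raw_html(raw_html, critical_phrases):
    """Return the phrases that do not appear anywhere in the raw HTML."""
    lowered = raw_html.lower()
    return [phrase for phrase in critical_phrases if phrase.lower() not in lowered]


# Here the reviews are injected later by a script, so they are not in the raw HTML.
server_html = """<html><body>
<h1>Snowboarding Lessons near Big Bear Lake</h1>
<div id="reviews"></div>
<script src="/js/load-reviews.js"></script>
</body></html>"""
print(missing_from_raw_html(server_html, ["Snowboarding Lessons", "Customer reviews"]))  # ['Customer reviews']
```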

Here are some possible problems in Googlebot’s process of rendering your web page:

- You’ve blocked Googlebot from JavaScript resources

- Your server can’t handle all the requests to crawl your content

- The JavaScript is too complex or outdated for Googlebot to understand

- JavaScript lazy-loads content into the page only after the crawler has finished with the page and moved on.

Understanding how search engines read websites allows SEOs and developers to implement schema markup. Schema is a way to label or organize your content so that search engines better understand what certain elements on your page are. This kind of code gives structure to your data, which is why schema is commonly referred to as “structured data”. The process of structuring your data is called “markup” because you are marking up your content with organizational code. Google’s preferred schema format is JSON-LD, which Bing also supports. After setting it up, you can test your structured data with Google’s Rich Results Test, which replaced the Structured Data Testing Tool.

Schema can also enable special features to boost your success in the SERPs.

- Top Story Carousels

- Review Stats

- Sitelinks Search Boxes

- Recipes

There are some additional points to consider for success with schema. First, you can use multiple types of schema markup on a page. However, if you mark up one element on a page, such as a product, and there are other products listed on the page, you must mark up those elements as well. You also should not mark up content that is not visible to visitors. For example, if you mark up reviews, make sure those reviews are actually visible on the page and were not written by the business itself. Google also asks that you mark up each duplicate version of a page, not just the canonical version. Structured markup should accurately reflect your page. Make sure you provide original and updated content on your structured data pages, and use the most specific type of schema markup that fits your content.

At times, sites may have multiple pages of similar content. The rel="canonical" tag was invented to iron out the complications that come with duplicate pages by helping search engines index the preferred version of the content rather than its duplicates. It tells them where the master version of a piece of content is located. You are essentially saying, “Hey, search engines, don’t index this page, index that one instead.” Proper canonicalization ensures that every unique piece of content on your website has only one URL. Google recommends a self-referencing canonical tag on every page of your site to prevent improper or inaccurate indexing.

Some misconceptions may arise from these optimizations, such as confusing content filtering with content penalties. When Google crawls your site and finds duplicates of a page, it chooses one canonical version to index and filters out the rest. This does not mean you have been penalized.

It is also very common for websites to have multiple duplicate pages due to sort and filter options. These options are often part of what’s called faceted navigation, which helps visitors find exactly what they’re looking for. This is most commonly found on e-commerce sites.
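For faceted navigation, one common approach is to point every sort or filter variant of a page at a single canonical URL. The sketch below strips a hypothetical set of facet parameters (`FACET_PARAMS` is an assumption; your own site’s parameters will differ), drops the fragment, lowercases the host, and builds the rel="canonical" link tag. A page with no facet parameters simply gets a self-referencing canonical.

```python
import html
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical sort/filter parameters that only create duplicate views of a page.
FACET_PARAMS = {"sort", "color", "size"}


def canonical_url(url):
    """Drop facet parameters and the fragment, and lowercase the host."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key.lower() not in FACET_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def canonical_link_tag(url):
    """Return the <link rel="canonical"> tag for the page's preferred URL."""
    return f'<link rel="canonical" href="{html.escape(canonical_url(url))}"/>'


print(canonical_link_tag("https://shop.example.com/boots?color=black&sort=price"))
```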

SEO is as much about people as it is about the search engines themselves. After all, search engines exist to serve searchers. This goal explains why search engines reward the sites that provide the best possible experience for searchers. It may also explain why some sites with a robust backlink profile rank lower than sites centered on user experience.

Considering that nearly half of all search traffic comes from mobile visitors, it should be clear that all websites should be optimized accordingly. You want visitors to have an easy and enjoyable experience on mobile devices as well as on desktop. In 2015, Google rolled out an update that favored websites optimized for mobile devices over those that were not. For this reason, Google recommends responsive web design.

As a high percentage of web traffic comes from mobile users, responsive web design is a critical element to apply in your code. Responsive websites are designed to fit whatever screen size your visitors are using. You can use CSS to make websites “respond” to whatever device size is being used. To test whether your website is optimized appropriately for mobile, you can use Google’s Mobile-Friendly Test.

Speed is another crucial aspect that search engines use in their rankings. AMP stands for Accelerated Mobile Pages and is used to deliver content to mobile visitors at much greater speed than non-AMP delivery. AMP can deliver content this quickly because it uses cached versions of websites and a special AMP version of HTML and JavaScript.

Google began switching to mobile-first indexing in 2018. With mobile-first indexing, Google crawls and indexes the mobile version of your site. If your mobile site has different links than its desktop version, the links in the mobile version are the ones that get indexed and used to pass link equity. To see whether your site has been switched to mobile-first indexing, check Google Search Console. The mobile and desktop versions of your site should contain the same content, along with the same structured data and metadata. Other tips for a better mobile version of your site include avoiding Flash, setting your viewport correctly, making the font size large enough, and making sure buttons are not too close together so they are easy to tap.

Google prefers to serve users content that loads quickly. Tools that can help you measure and improve your website’s speed include:

- Google’s PageSpeed Insights Tool

- GTMetrix

- Google’s Mobile Website Speed and Performance Tester

- Google Lighthouse

- Chrome DevTools

Images tend to be one of the main culprits of slow page speeds. In addition to compression, adjusting alt texts, submitting image sitemaps, and choosing the right format, there are some other ways you can alter the way in which you present images to your users.

The srcset attribute lets you display different versions of an image depending on the situation. It is added to the img tag to provide versions of an image for differently sized devices. This not only speeds up load times but also improves the user’s experience by serving the optimal image for the device they are using to view your page. To make the most of srcset, be aware of the range of device sizes. It is commonly assumed that there are only three image sizes to plan for (desktop, mobile, and tablet), but there is a much larger variety of screen sizes and resolutions.
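Here is a small sketch that builds the srcset and sizes attributes described above for a set of pre-resized image files. The file-naming convention (`hero-480w.jpg` and so on) and the helper names are hypothetical; the attribute syntax itself, a width descriptor such as `480w` after each file plus a `sizes` hint, is standard HTML.

```python
import html


def build_srcset(base_name, widths, ext="jpg"):
    """Return a srcset value such as 'hero-480w.jpg 480w, hero-800w.jpg 800w'."""
    return ", ".join(f"{base_name}-{w}w.{ext} {w}w" for w in sorted(widths))


def responsive_img(base_name, widths, alt, sizes="100vw", ext="jpg"):
    """Build an <img> tag; the largest file doubles as the fallback src."""
    fallback = f"{base_name}-{max(widths)}w.{ext}"
    return (f'<img src="{fallback}" srcset="{build_srcset(base_name, widths, ext)}" '
            f'sizes="{sizes}" alt="{html.escape(alt)}">')


print(responsive_img("hero", [480, 800], "Snowboarder at Big Bear Lake",
                     sizes="(max-width: 600px) 480px, 800px"))
```

The browser uses the `sizes` hint and the device’s screen to pick one file from the srcset list, so a phone can download the 480-pixel file while a large desktop gets the 800-pixel one.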

Lazy loading is what happens when you visit a web page and, instead of seeing blank white space where an image should be, you see a colored box or a blurry, lightweight version of the image while the surrounding text loads. After a few seconds, the image loads in full resolution. Lazy loading also helps optimize your critical rendering path.

Condensing and bundling files can increase the speed of a site. Minification condenses code files by removing things like line breaks and spaces, and by abbreviating variable names wherever possible. Bundling, on the other hand, combines several files written in the same language into a single file. For example, a bunch of JavaScript files can be combined into one file to reduce the number of files the browser has to load. By using both minification and bundling, you can boost your page speed and reduce the number of HTTP requests.
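To illustrate minification and bundling, here is a deliberately naive CSS sketch using Python regular expressions: it strips comments and unnecessary whitespace, then concatenates the minified files in order. The function names are hypothetical, and the minifier does not understand CSS strings or `url()` values, so in practice an established build tool is the safer choice.

```python
import re


def minify_css(css):
    """Naive CSS minifier: strips comments and unnecessary whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove comments
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace runs
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)           # trim around braces, semicolons, commas
    css = re.sub(r":\s+", ":", css)                       # trim spaces after colons
    css = css.replace(";}", "}")                          # drop the last semicolon in a block
    return css.strip()


def bundle_css(sources):
    """Minify each stylesheet and concatenate them, preserving order."""
    return "".join(minify_css(source) for source in sources)


base = """
/* Base styles */
body {
    color: #333;
    font-family: Arial, sans-serif;
}
"""
print(bundle_css([base, "h1 { margin: 0; }"]))
```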

Link Building

Creating content that people are searching for, that answers their questions, and that search engines can understand are all important factors. However, those factors alone do not mean that the site will rank. To outrank the rest of the sites with the same qualities, you must build authority. You can build authority by earning links from authoritative websites, building your brand, and nurturing an audience who will help amplify your content.

Inbound links, also known as backlinks or external links, are HTML hyperlinks that point from one website to another. They can be thought of as the currency of the internet because they act a lot like real-life reputation. Since the 1990s, search engines have used them like votes to decide which websites are important and popular. Internal links, or links pointing to another page within your own website, behave very similarly. A large number of internal links pointing to a particular page on your website will signal to Google that the page is important, as long as they are added in a natural, non-spammy way.

So, how do search engines evaluate all those links? Google’s Search Quality Rater Guidelines place a great deal of importance on the concept of E-A-T, which stands for expertise, authoritativeness, and trustworthiness. Sites that meet these standards tend to be valued more highly by search engines and rank well in the SERPs. Creating a site that demonstrates expertise, authoritativeness, and trustworthiness should be a primary focus of your SEO practice.

Links coming from authoritative and credible sources carry more weight and value. For example, a site like Wikipedia has thousands of diverse sites linking to it. This indicates that it provides a lot of expertise, has cultivated authority, and is trusted by those other sites. To earn trust and authority with search engines, be on the lookout for websites that embody the principles behind E-A-T, and make the effort to earn links from them.

User intent refers to the main reason or desire behind a user’s query. In some cases, a user’s intent is much clearer than in others. For example, the query “snowboarding” does not identify exactly what the user is searching for. Are they looking for snowboarding videos? Snowboarding lessons? A snowboard to buy? A query like “snowboarding lessons near Big Bear Lake”, however, carries a much clearer intent. Your goal should be to craft content that satisfies the user’s intent.

There are two different types of links to be aware of when discussing link building: nofollow links and dofollow links. Nofollow links do not carry link equity; they tell search engines not to follow the link to the destination page. Some search engines may still follow the link, but they will not pass any link equity through it. Nofollow links may be used when the site owner is linking to an untrustworthy source or when the link was paid for. They may also be used when the link is created by the owner of the destination page, such as a link placed in the comment section of a page.

Dofollow links may look like: <a href="https://www.example.com">Click Here</a>

Nofollow links may look like: <a href="https://www.example.com" rel="nofollow">Click Here</a>

If follow links pass all the link equity, shouldn’t that mean you want only follow links?

Not necessarily. A healthy balance of dofollow and nofollow links is favorable, because it makes your site’s link profile look much more natural. A nofollow link may not pass link equity, but it can still send traffic to your website and may eventually lead to earning followed links.
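To see which links on a given page are followed and which are not, you can count anchors by their rel attribute. The sketch below uses Python’s standard `html.parser`; the class name is hypothetical, and it only recognizes the `nofollow` value discussed here. It reports counts rather than judging them, since there is no published ideal ratio.

```python
from html.parser import HTMLParser


class LinkRelCounter(HTMLParser):
    """Count <a href> links with and without rel="nofollow"."""

    def __init__(self):
        super().__init__()
        self.follow = 0
        self.nofollow = 0

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        if "href" not in attributes:
            return  # anchors without href are not links
        # rel can hold several space-separated values, e.g. "noopener nofollow".
        rel_values = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_values:
            self.nofollow += 1
        else:
            self.follow += 1


counter = LinkRelCounter()
counter.feed('<a href="https://www.example.com">Click Here</a> '
             '<a href="https://www.example.com" rel="nofollow">Click Here</a> '
             '<a href="https://example.org" rel="noopener nofollow">Docs</a>')
counter.close()
print(counter.follow, counter.nofollow)  # 1 2
```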

Your link profile is an overall look at all the backlinks your site has earned, including the total number of links, their overall quality, their diversity, and more. Link diversity refers to whether your links come from a few sites or many: one site linking to you a hundred times is very different from a hundred sites each linking to you once.

A healthy link profile is one that indicates that your links are coming from authoritative websites and they were earned fairly. Artificial links go against Google’s terms of service and can get a website deindexed.
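The diversity idea above can be quantified from a list of backlink source URLs, such as an export from a link research tool. This sketch (with a hypothetical function name) treats each hostname, minus a leading `www.`, as one referring domain. That is a simplification, since a real tool would group subdomains under their registrable domain.

```python
from collections import Counter
from urllib.parse import urlsplit


def link_diversity(backlink_sources):
    """Summarize how spread out a list of backlink source URLs is."""
    if not backlink_sources:
        return {"total_links": 0, "referring_domains": 0, "top_domain_share": 0.0}
    hosts = Counter((urlsplit(url).hostname or "").removeprefix("www.")
                    for url in backlink_sources)
    return {
        "total_links": len(backlink_sources),
        "referring_domains": len(hosts),
        # Share of all links that come from the single most frequent domain.
        "top_domain_share": max(hosts.values()) / len(backlink_sources),
    }


print(link_diversity([
    "https://blog.example.com/a",
    "https://blog.example.com/b",
    "https://www.blog.example.com/c",
    "https://news.example.org/story",
]))
```

A high `top_domain_share` means most of your links come from one place, the “one site linking to you a hundred times” end of the spectrum.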

Often, when a website mentions your brand, it does so without linking to it. Use a web explorer tool to discover these unlinked mentions, then reach out to the publishers to ask for proper links.

Links from websites that cover similar or related topics are much better links to acquire. Linking domains don’t have to match the exact topic of your page, but they should be somewhat relevant to your subject matter. Links from irrelevant websites can send confusing signals to search engines about what your website is about, so your time is better spent pursuing backlinks from places that make sense in relation to your site rather than off-topic ones.

The anchor text of a link helps tell Google what the linked page is about. If dozens of links point to a page with variations of a word or phrase, that page has a higher chance of ranking for that word or phrase. However, don't go overboard: too many links with the same word or phrase can look like an attempt to manipulate your site's rankings and seem spammy. A good rule of thumb is to aim for relevance and avoid spam.
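To keep anchor text natural, it can help to measure how much of it exactly matches one phrase. This sketch normalizes case and whitespace and flags a phrase whose share exceeds max_share. The 0.3 threshold is an arbitrary illustration, not a limit published by Google, and the anchor list is made-up data.

```python
from collections import Counter


def normalize(anchor):
    """Lowercase and collapse whitespace so 'Hiking  Boots' counts as 'hiking boots'."""
    return " ".join(anchor.lower().split())


def anchor_text_report(anchors, target_phrase, max_share=0.3):
    """Return (exact-match share, over_threshold, three most common anchors).

    max_share is an illustrative cutoff, not a published search-engine limit.
    """
    counts = Counter(normalize(a) for a in anchors)
    share = counts[normalize(target_phrase)] / len(anchors) if anchors else 0.0
    return share, share > max_share, counts.most_common(3)


anchors = [
    "hiking boots", "Hiking Boots", "hiking  boots",  # same phrase, different case/spacing
    "Acme Hiking Gear", "this review", "click here",
    "best trail boots", "acmehiking.example",
]
share, too_high, top = anchor_text_report(anchors, "hiking boots")
print(f"{share:.1%} exact-match anchors, over threshold: {too_high}")
```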

Your fundamental goal in link building should not be search engine rankings alone. Instead, focus on building links and relationships that send quality traffic to your website. This is another primary reason to seek links from relevant sources.

While cultivating your collection of links, avoid spammy link tactics: anything sneaky, unnatural, or otherwise low quality. Google penalizes sites with spammy link profiles. Buying links or participating in improper link exchanges may seem like an easy way to boost rankings, but it can put all your hard work at risk. A good note to remember is that Google wants you to earn links, not build them.

- Purchased Links – Both Google and Bing seek to discount the influence of paid links. While they may not know exactly which links were paid for and which were earned organically, they may be able to find out by examining patterns and clues that signal paid links.

- Link Exchanges / Reciprocal Linking – Exchanging links with closely affiliated partners and people is acceptable and even encouraged. However, link exchanges on a mass scale, or with unaffiliated partners, may warrant penalties.

- Low-Quality Directory Links – A large number of pay-for-placement web directories exist purely to serve link building. Watch out for the low-quality ones, which tend to all look similar.

If your site does receive a manual penalty, there are steps you can take to get it lifted.

Links Should Always:

- Be earned / editorial

- Come from authoritative pages

- Increase with time

- Come from topically relevant sources

- Be strategically targeted or naturally earned

- Be a healthy mix of follow and nofollow

- Bring qualified traffic to your site

- Use relevant, natural anchor text

Find Customer and Partner Links

One strategy is to send partnership badges to your customers and partners, or offer to write testimonials for their products. This gives them something to display on their website with a link back to you.

Publish a Blog

Blogging has long been one of the primary formats for publishing content and information. Blogs can provide consistent content on the web, spark conversation and discussion, and earn listings and links from other blogs. As valuable as they may be, you still want to avoid low-quality guest posting just for the sake of link building.

Creating Unique Resources

Thorough, compelling content and resources have the potential to be widely shared. Engaging, high-value content often has one or more of these traits: it elicits strong emotions (joy, sadness, eagerness, etc.), presents something new (or at least communicates it in a new way), is visually appealing, addresses a timely need or interest, or is location-specific (e.g., the most-searched-for burger spot in the city). Creating pieces with these traits is a great way to attract and build links. You can also create highly specific content pieces that target a handful of websites.

Build Resource Pages

Resource pages are a great way to build links. To find them, it helps to know some advanced Google search operators. For example, if you were building links for a company that makes hiking equipment, you could search for hiking intitle:"resources", which brings up pages that mention hiking and have "resources" in their title. These may be good link targets. They can also give you great ideas for content creation: think about the types of resources you could create that these pages would all like to reference and link to.
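Search-operator queries like the intitle:"resources" example can be generated and turned into search URLs for several topics at once. In this sketch the inurl:resources and intitle:"helpful links" variants are illustrative assumptions, and how a search engine interprets each operator can change over time.

```python
from urllib.parse import urlencode


def resource_page_queries(topic):
    """Build a few search-operator queries for finding resource pages on a topic.

    Only the intitle:"resources" pattern comes from the text; the others are illustrative.
    """
    return [
        f'{topic} intitle:"resources"',
        f"{topic} inurl:resources",
        f'{topic} intitle:"helpful links"',
    ]


def to_search_url(query):
    # urlencode percent-encodes the quotes and colon and turns spaces into '+'
    return "https://www.google.com/search?" + urlencode({"q": query})


for query in resource_page_queries("hiking"):
    print(to_search_url(query))
```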

Get Involved in Your Local Community

For a local business, community outreach can result in some of the most valuable and influential links. Some ways to earn them:

- Engaging in sponsorships and scholarships

- Donating to worthy or local causes and joining business associations

- Posting jobs and offering internships

- Promoting loyalty programs

- Running a local competition

- Developing real-world relationships with related local businesses

- Hosting or participating in community events, seminars, workshops, and organizations

Link Building for Local SEO

Linked unstructured citations are references to a business's contact information, with a link, on a non-directory platform such as a blog or a news site. Building them can be highly effective for improving local SEO rankings, and it is a great way to build links if you are doing local marketing.

Refurbish Top Content

Sometimes there is no need to take the time to write a fresh piece. An alternative strategy is to take content that has performed well and repurpose it for other platforms such as YouTube, Instagram, Quora, and more, which helps expand your acquisition funnel beyond Google. You can also update older content and republish it on the same platform. If a few authoritative sites have linked to a particular page on your site, update the content on that page and let other industry leaders know about it; this may earn you more links. The same approach works with images: if a website has used your image without linking back to you, you can ask whether they would mind including a link back to your website.

Be Newsworthy

One of the most effective and time-honored ways to earn links is to gain the attention of the press, media, bloggers, and news outlets. Sometimes this can be achieved by releasing a new product, stating something controversial, or giving away something for free. Since so much of SEO is about creating a high-value digital representation of your brand, to succeed in SEO you have to be a great brand.

Be Personal in Email Outreach

The most common mistake new SEOs make when trying to build links is failing to send personal or valuable outreach messages to their targets. Your primary goal for the initial outreach email is simply to get a response. Make it personal by mentioning something the person is working on, where they went to school, their dog, etc. Then provide value: let them know about a broken link on their site or a page that isn't working properly. Keep it short and simple. Finally, ask a simple question in your initial message. Typically it should not be a request for a link; you'll want to build rapport first.

Measure Your Success to Prove Value

Earning links is resource-intensive, so measure your success to prove value. The metrics for link building should match the site's overall KPIs, such as sales, email subscriptions, or page views. You should also evaluate Domain Authority and Page Authority (DA/PA), rankings for your desired keywords, and the amount of traffic to your content.

Total Number of Links

Tracking the growth in the total number of links to your page is one of the most effective ways to measure your link building efforts, since it shows whether they are trending in a positive direction. Link cleanup may also be necessary: some SEOs need not only to build good links but also to get rid of bad ones. If you are cleaning up links while building good ones, a flat or declining "linking domains over time" graph is normal.
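Because cleanup and new link building often happen at the same time, comparing which linking domains were gained and lost between two crawls tells you more than the total alone. This sketch assumes you have two sets of linking domains (for example, from backlink tool exports taken a month apart); the domain names are hypothetical.

```python
def compare_snapshots(before, after):
    """Compare two sets of linking domains taken from backlink exports at different dates."""
    return {
        "gained": sorted(after - before),
        "lost": sorted(before - after),
        "net_change": len(after) - len(before),
    }


# Hypothetical crawls: a spammy directory link was cleaned up and a news link was earned
january = {"trailnews.example", "outdoorblog.example", "spammy-directory.example"}
february = {"trailnews.example", "outdoorblog.example", "citynews.example"}

report = compare_snapshots(january, february)
print(report)
# {'gained': ['citynews.example'], 'lost': ['spammy-directory.example'], 'net_change': 0}
```

Here the net change is zero even though the profile clearly improved, which is why a flat total during cleanup is not necessarily a bad sign.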

Questions to Consider if Backlinks Campaigns are Not Going Well

Did you create content that was 10x better than anything else out there? – If your link building efforts fell flat, it may be because your content was not substantially better than the competition.

Did you promote your content? How? – Promotion is arguably the most difficult part of link building, but it is what moves the needle.

How many links do you actually need? – In Keyword Explorer's SERP analysis report, you can view the pages that rank for the term you're targeting and how many backlinks those URLs have. This can give you a good benchmark for how many links you might need to rank and which websites to target.
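Turning the ranking pages' backlink counts into a benchmark can be as simple as taking the median, which a single heavily linked outlier will not distort the way an average would. The URLs and counts below are made-up illustrations, not real SERP data.

```python
from statistics import median


def backlink_benchmark(ranking_pages):
    """ranking_pages: list of (url, backlink_count) for URLs ranking for the target keyword."""
    if not ranking_pages:
        raise ValueError("need at least one ranking page")
    counts = sorted(count for _, count in ranking_pages)
    return {"median": median(counts), "min": counts[0], "max": counts[-1]}


# Made-up numbers for illustration, not real SERP data
top_results = [
    ("https://a.example/hiking-guide", 340),
    ("https://b.example/best-boots", 95),
    ("https://c.example/boot-review", 210),
    ("https://d.example/gear-list", 48),
    ("https://e.example/top-picks", 150),
]
print(backlink_benchmark(top_results))  # {'median': 150, 'min': 48, 'max': 340}
```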

What was the quality of the links you received? – A link from an authoritative source is much better than ten from low-quality sources; quantity isn't everything. When choosing target websites, you can prioritize them by how authoritative they are, using metrics such as Domain Authority or Page Authority.
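Prioritizing targets can combine the relevance advice from earlier in this section with an authority score. This sketch assumes each target carries a 0 to 100 authority score (such as DA or PA) and a relevance flag you set yourself. The min_authority cutoff of 30 and the sites are hypothetical.

```python
def prioritize_targets(targets, min_authority=30):
    """Keep relevant targets at or above min_authority, highest authority first.

    Each target is a dict with hypothetical 'site', 'authority' (0 to 100) and 'relevant' keys.
    """
    shortlist = [t for t in targets if t["relevant"] and t["authority"] >= min_authority]
    return sorted(shortlist, key=lambda t: t["authority"], reverse=True)


targets = [
    {"site": "trailnews.example", "authority": 62, "relevant": True},
    {"site": "cheap-links.example", "authority": 12, "relevant": False},
    {"site": "outdoorblog.example", "authority": 41, "relevant": True},
    {"site": "recipes.example", "authority": 70, "relevant": False},  # authoritative but off-topic
    {"site": "hikeclub.example", "authority": 25, "relevant": True},
]
for t in prioritize_targets(targets):
    print(t["site"], t["authority"])
```

Filtering on relevance before sorting keeps a high-authority but off-topic site from jumping to the top of the list.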

Many of the methods you use to build links also indirectly help you build your brand. You can view link building as a great way to increase awareness of your brand, the topics you are an authority on, and the products and services you offer. To amplify this, share your content on social media. This not only makes your audience more aware of your content but may also encourage them to share it within their own networks. Social shares can also bring more traffic and new visitors to your website, which grows brand awareness, and with growth in brand awareness comes growth in trust and links.

The different ways you come to trust a brand:

- Awareness (you know they exist)

- Helpfulness (they provide answers to your questions)

- Integrity (they do what they say they will)

- Quality (the product or service delivers good value)

- Continued Value (they continue to provide value even after you've gotten what you needed)

- Voice (they communicate in unique, memorable ways)

- Sentiment (others have good things to say about their experience with the brand)

A healthy review portfolio can be exceptionally beneficial to your business's success. That said, be mindful of how you collect your reviews and where they come from. Follow these rules of thumb to make sure your reviews are acquired appropriately:

- Never pay an individual or agency to create a fake positive review for your business or a fake negative review of a competitor.

- Don't review your own business or the businesses of your competitors.

- Never offer incentives of any kind in exchange for reviews.

- All reviews should be left by customers in their own accounts; never post reviews on their behalf.

- Don't set up a review station or kiosk in your place of business; many reviews coming from the same IP address may look like spam.

- Read the guidelines of every review platform you choose to work with.

Playing by the rules and offering an exceptional customer experience is how you build trust and authority in your field. Authority is built when brands do great things in the real world, make customers happy, create and share valuable content, and earn links from reputable sources.

Measuring and Tracking

One hallmark of a professional SEO is tracking everything, from conversions and rankings to lost links and more.

While it is important and common to have both micro and macro goals in mind, establishing one primary end goal is essential for orchestrating a plan or strategy. You need a firm understanding of the website's goals and/or the client's needs. Asking your clients good questions not only helps you target your efforts strategically, but also shows that you care about their success.

Some example client questions:

- Can you give us a brief history of your company?

- What is the monetary value of a newly qualified lead?

- What are your most profitable services/products (in order)?

Make sure your goals are measurable, because if you can't measure something, you can't improve it. Be specific with your goals rather than watering them down with vague industry marketing jargon. Sharing your goals can also help; some studies suggest that people who share their goals with others are more likely to achieve them.

Once you have settled on one primary goal, identify any additional metrics that could help your site reach it.

Engagement Metrics

How are people behaving once they reach your site? That is the question engagement metrics seek to answer. Some of the most popular metrics for measuring how people engage with your content include:

- Conversion Rate – The number of conversions (for a single desired goal or action) divided by the number of unique visits. A conversion can be anything from an email sign-up to a purchase to an account creation. Knowing your conversion rate can help you estimate the return on investment (ROI) your website traffic might deliver.

- Time on Page – How long did people spend on your page? If a long blog post is getting low time on page, chances are the content is not being fully consumed. However, low time on page is not always bad: for a contact page, it would be normal.
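The conversion rate formula translates directly into code, and multiplying it by traffic and the value of one conversion gives a rough estimate to feed into an ROI calculation. The $20 value per sign-up and the traffic figures are hypothetical; in practice the value might come from a client question such as the monetary value of a newly qualified lead.

```python
def conversion_rate(conversions, unique_visits):
    """Conversions for a single goal divided by unique visits."""
    return conversions / unique_visits if unique_visits else 0.0


def estimated_value(visits, rate, value_per_conversion):
    """Rough value of traffic: expected conversions times what each conversion is worth."""
    return visits * rate * value_per_conversion


rate = conversion_rate(45, 1500)         # e.g. 45 email sign-ups from 1,500 unique visits
value = estimated_value(2000, rate, 20)  # hypothetical: 2,000 visits, $20 per sign-up
print(f"conversion rate: {rate:.1%}")    # conversion rate: 3.0%
print(f"estimated value: ${value:,.2f}") # estimated value: $1,200.00
```

This is a value estimate only; a full ROI figure would also subtract what the traffic cost to earn.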

Pages Per Visit